Why Are Chips the Core Competitiveness Behind ChatGPT?

Introduction

Recently, generative models led by ChatGPT have become a new hotspot in artificial intelligence. Microsoft and Google in Silicon Valley have invested heavily in such technologies (Microsoft invested $10 billion in OpenAI, the company behind ChatGPT, and Google recently released its self-developed Bard model), and Internet technology companies in China such as Baidu have also said that they are developing such technologies and will launch them shortly.

Catalog

Ⅰ What is ChatGPT?

Ⅱ ChatGPT Models

Ⅲ What is the Impact of ChatGPT on the Semiconductor Market?

Ⅳ What is the Relation Between Chips and ChatGPT?

Ⅴ ChatGPT FAQ

Ⅰ What is ChatGPT?

The ChatGPT craze is sweeping the world. ChatGPT (Chat Generative Pre-trained Transformer) is a dialogue AI model launched by OpenAI at the end of November 2022. It has attracted widespread attention since its launch: its monthly active users reached 100 million in January 2023, making it the fastest-growing consumer application in history at that time. Based on a question-and-answer mode, ChatGPT can perform reasoning, code writing, text creation, and more. These advantages and the resulting user experience have greatly increased traffic in its application scenarios.

Figure1-ChatGPT

Ⅱ ChatGPT Models

The models represented by ChatGPT share a common feature: they are pre-trained on massive data and are often paired with a relatively powerful language model. The main function of the language model is to learn from a large body of existing corpora. After learning, it can understand the user's language instructions and generate relevant text output according to those instructions.

2.1 Language Model

Models can be roughly divided into two categories: language models and image models. Language models are represented by ChatGPT. As mentioned above, after training on massive data, its language model can not only understand the meaning of user instructions but also generate relevant text according to them (for example, writing a poem in the style of Li Bai). This means that ChatGPT needs a sufficiently large language model (Large Language Model, LLM) to understand the user's language and to produce high-quality language output. For example, the model must understand how to generate poetry, how to generate poetry in the style of Li Bai, and more. It also means that large language models in language-based generative AI need a very large number of parameters to complete this kind of complex learning and to remember so much information. Taking ChatGPT as an example, its parameter count is as high as 175 billion (stored as standard 32-bit floating-point numbers, this takes up about 700 GB of storage space), which is why its language model is called "large".
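The storage figure quoted above follows from simple arithmetic: parameter count multiplied by bytes per parameter. The sketch below reproduces it, assuming "standard floating-point" means 32-bit (4 bytes) and using decimal gigabytes; the lower-precision rows are added only for comparison and are not from the article.

```python
# Bytes needed to store one parameter at common numeric precisions.
# Only the fp32 row corresponds to the figure in the text; the others are for comparison.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def model_size_gb(num_params: int, precision: str = "fp32") -> float:
    """Storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


params = 175_000_000_000  # parameter count quoted in the text

for precision in BYTES_PER_PARAM:
    print(f"{precision}: {model_size_gb(params, precision):.0f} GB")
```

This counts weights only; memory used during training (gradients, optimizer state, activations) would come on top of it.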

Figure2-Language Model

2.2 Image Model

Another type of model is the image model, represented by diffusion models. Typical models include DALL-E from OpenAI, Imagen from Google, and the most popular one, Stable Diffusion, developed by CompVis, Stability AI, and Runway. This type of image generation model also uses a language model to understand the user's language instructions and then generates high-quality images based on those instructions. Unlike in a language generation model, the language model here is used mainly to understand user input and does not generate language output, so its number of parameters can be much smaller (on the order of hundreds of millions). The image diffusion model itself is also relatively small, generally on the order of billions of parameters, but its amount of computation is not small, because the resolution of the generated image or video can be very high.

Ⅲ What is the Impact of ChatGPT on the Semiconductor Market?

ChatGPT Model Requirements for Chips

As mentioned earlier, generative models represented by ChatGPT need to learn from a large amount of training data to achieve high-quality output. To support efficient training and inference, generative models also place their own requirements on the related chips.

The first is the demand for distributed computing. Language generation models such as ChatGPT have hundreds of billions of parameters, so training and inference on a single machine are almost impossible, and large-scale distributed computing must be used. Distributed computing places great demands on the data interconnection bandwidth between machines and on the computing chips' support for it (such as RDMA), because in many cases the bottleneck of a task is not computing but data interconnection. Especially in such large-scale distributed computing, efficient chip support for distributed computing becomes the key.
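To see why interconnect bandwidth can become the bottleneck, here is a rough sketch of the traffic generated by one gradient synchronization. It assumes pure data parallelism with a ring all-reduce, in which each worker sends 2(N-1)/N times the payload; the article names neither scheme, and real training systems mix several kinds of parallelism. The payload size, worker count, and link speed are hypothetical values chosen for illustration.

```python
def ring_allreduce_bytes_per_worker(payload_bytes: float, num_workers: int) -> float:
    """Bytes each worker sends during one ring all-reduce of the given payload."""
    if num_workers < 2:
        return 0.0  # a single worker has nothing to exchange
    return 2 * (num_workers - 1) / num_workers * payload_bytes


def transfer_seconds(bytes_sent: float, link_gbytes_per_s: float) -> float:
    """Time to push the bytes through one link, ignoring latency and overlap."""
    return bytes_sent / (link_gbytes_per_s * 1e9)


# Hypothetical scenario, not from the article:
payload = 175e9 * 2        # 175 billion gradients stored as 2-byte values
workers = 1024             # assumed number of data-parallel workers
link_speed = 25.0          # assumed per-link bandwidth in GB/s

sent = ring_allreduce_bytes_per_worker(payload, workers)
print(f"Each worker sends about {sent / 1e9:.0f} GB per synchronization")
print(f"At {link_speed} GB/s this takes about {transfer_seconds(sent, link_speed):.0f} s")
```

Under these assumptions the per-step transfer takes on the order of tens of seconds unless it is overlapped with computation or spread across faster links, which is the sense in which interconnect rather than arithmetic can dominate.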

The second is memory capacity and bandwidth. Although distributed training and inference are unavoidable for language generation models, the local memory capacity and bandwidth of each chip still largely determine the execution efficiency of a single chip (because the memory of each chip is used to the limit). For image generation models, the whole model (about 20 GB) can be placed in the memory of one chip, but as image generation models evolve further, their memory requirements may also increase. From this perspective, ultra-high-bandwidth memory technology represented by HBM will become an inevitable choice for the related acceleration chips, and generative models will in turn accelerate further increases in HBM capacity and bandwidth. In addition to HBM, new memory technologies such as CXL, combined with software optimization, will also increase the capacity and performance of local memory in such applications, and they are expected to gain wider industrial adoption from the rise of generative models.
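The capacity and bandwidth argument can be made concrete with a back-of-the-envelope sketch. The model sizes (about 700 GB for the language model, about 20 GB for the image model) come from the article; the per-chip memory of 80 GB and the memory bandwidth of 2000 GB/s are assumptions chosen for illustration, not figures from the source.

```python
import math


def min_devices_for_weights(model_gb: float, device_memory_gb: float) -> int:
    """Smallest number of chips whose combined memory can hold the weights."""
    return math.ceil(model_gb / device_memory_gb)


def weight_read_seconds(model_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to stream all weights from local memory once."""
    return model_gb / bandwidth_gb_per_s


DEVICE_MEMORY_GB = 80        # assumed HBM capacity per chip
HBM_BANDWIDTH_GB_S = 2000    # assumed HBM bandwidth per chip

for name, size_gb in [("language model", 700), ("image model", 20)]:
    chips = min_devices_for_weights(size_gb, DEVICE_MEMORY_GB)
    print(f"{name}: {size_gb} GB needs at least {chips} chip(s)")

read_time = weight_read_seconds(20, HBM_BANDWIDTH_GB_S)
print(f"Streaming the 20 GB image model once takes about {read_time * 1000:.0f} ms")
```

With these assumed numbers the image model fits on one chip while the language model must be split across several, and every full pass over the weights is bounded by memory bandwidth, which is why both capacity and bandwidth matter.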

Finally, whether for language or image generation models, the demand for computing power is large. As image generation models produce higher and higher resolutions and move toward video applications, the demand for computing power may increase further. The computation required by current mainstream image generation models is about 20 TFLOPS, and with the trend toward higher resolution and video, a computing power requirement of 100-1000 TFLOPS is likely to become the standard.

The exponentially growing demand for chips and semiconductors behind ChatGPT

Whether from the perspective of technical principles or operating conditions, ChatGPT needs strong computing power as support, which will drive a substantial increase in scenario traffic. In addition, ChatGPT's demand for high-end chips will drive up the average chip price. Rising volume and rising price together will lead to surging demand for chips. Facing exponential growth in computing power and data transmission needs, the GPU or CPU+FPGA chip manufacturers and optical module manufacturers that can supply them will soon usher in a blue ocean market.

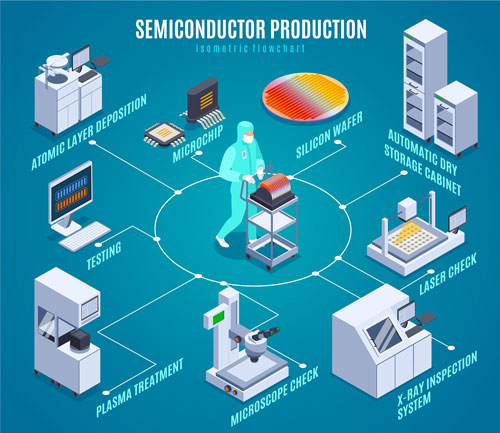

Figure3-semiconductor

Ⅳ What is the Relation Between Chips and ChatGPT?

▲ Chip demand = quantity ↑ × price ↑

1) Quantity: new scenarios brought by AIGC, plus greatly increased traffic in existing scenarios

①Technical principle: ChatGPT is a dialogue AI model developed on the GPT-3.5 architecture. After the GPT-1, GPT-2, and GPT-3 iterations, the GPT-3.5 model introduced training on code and instruction fine-tuning, and added RLHF (reinforcement learning from human feedback), thereby evolving its capabilities. As a well-known NLP model, GPT is based on the Transformer architecture. As the model continues to iterate, the number of layers increases, and so does the demand for computing power.

②Operating conditions: ChatGPT needs three things to run well: training data, model algorithms, and computing power. Among them, the training data market is vast and its technical barriers are low, so data can be obtained by investing enough manpower, material, and financial resources. The base model and model tuning have a relatively low demand for computing power, but obtaining ChatGPT's capabilities requires large-scale pre-training on the base model, and the ability to store knowledge comes from its 175 billion parameters, which requires a great deal of computing power. Therefore, computing power is the key to running ChatGPT.

2) Price: The demand for high-end chips will drive up the average price of chips

A top-level Nvidia GPU costs about 80,000 yuan, and a GPU server usually costs more than 400,000 yuan. ChatGPT needs at least tens of thousands of Nvidia A100 GPUs to support its computing infrastructure, and the cost of model training exceeds 12 million US dollars. From the perspective of the chip market, the rapid increase in chip demand will further raise the average price of chips. At present, OpenAI has launched a $20-per-month subscription plan, taking a first step toward a high-quality subscription business model, and its ability to keep expanding in the future will improve greatly.
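The scale of the hardware bill implied by these figures can be estimated with a one-line multiplication. The sketch below uses the article's unit price of 80,000 yuan per GPU and takes 10,000 GPUs as a conservative lower bound (an assumption; the text says at least tens of thousands). It covers GPU purchase cost only and ignores servers, networking, power, and facilities.

```python
GPU_PRICE_YUAN = 80_000   # per-GPU price quoted in the text
MIN_GPU_COUNT = 10_000    # assumed conservative lower bound on the GPU count


def gpu_purchase_cost_yuan(gpu_count: int, unit_price_yuan: int = GPU_PRICE_YUAN) -> int:
    """Total purchase cost of the GPUs alone, in yuan."""
    return gpu_count * unit_price_yuan


total = gpu_purchase_cost_yuan(MIN_GPU_COUNT)
print(f"{MIN_GPU_COUNT:,} GPUs x {GPU_PRICE_YUAN:,} yuan = {total:,} yuan")
```

Even at this lower bound the GPUs alone come to 800 million yuan, which illustrates how quantity and unit price multiply in the demand equation above.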

The "hero behind the scenes": computing power from GPU or CPU+FPGA

- GPU can support strong computing power requirements.

Specifically, from the perspective of AI model construction, the first stage is to build a pre-trained model using very large computing power and data, and the second stage is to conduct targeted training on the pre-trained model. Thanks to its parallel computing capability, the GPU is suited to both training and inference, so it is widely used at present. At least 10,000 Nvidia GPUs have been used to train the ChatGPT model (AlphaGo, which was popular for a while, needed only 8 GPUs), and inference, which runs on Microsoft's Azure cloud service, also requires GPUs. Therefore, the rapid rise of ChatGPT is reflected in the demand for GPUs.

- CPU+FPGA

From the perspective of deep learning, although the GPU is the most suitable chip for deep learning applications, the CPU and FPGA cannot be ignored. As a programmable chip, the FPGA can be extended for specific functions and has some room for development in the second stage of AI model construction. However, to implement deep learning functions, an FPGA needs to be combined with a CPU; together they can be applied to deep learning models and can also meet large computing power requirements.

- Cloud Computing Relies on Optical Modules to Realize Device Interconnection

AI models are developing toward large-scale language models led by ChatGPT, driving increases in data transmission volume and computing power. As data transmission volume grows, demand for optical modules, which carry device interconnection in the data center, increases accordingly. In addition, as computing power and energy consumption increase, manufacturers are seeking ways to reduce energy consumption, which promotes the development of low-energy optical modules.

Conclusion: ChatGPT is an emerging super-intelligent dialogue AI product. Whether from the perspective of technical principles or operating conditions, ChatGPT needs strong computing power as support, which will drive a substantial increase in scenario traffic. In addition, ChatGPT's growing demand for high-end chips will drive up the average price of chips, and the increase in both volume and price will lead to surging demand for chips. Facing exponentially growing computing power and data transmission needs, the GPU or CPU+FPGA chip manufacturers and optical module manufacturers that can supply them will soon usher in a blue ocean market.

Ⅴ ChatGPT FAQ

1. Can ChatGPT access the internet?

A search engine indexes web pages on the internet to help the user find the information they asked for. ChatGPT does not have the ability to search the internet for information.

2. Does ChatGPT keep history?

While ChatGPT is able to remember what the user has said earlier in the conversation, there is a limit to how much information it can retain. The model is able to reference up to approximately 3000 words (or 4000 tokens) from the current conversation - any information beyond that is not stored.
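The effect of such a limit can be illustrated with a small sketch that keeps only the most recent messages fitting within a token budget. This is not how ChatGPT is known to manage its history; `estimate_tokens` and `trim_history` are hypothetical helpers, and the token estimate simply applies the ratio quoted above (about 3000 words to 4000 tokens) instead of a real tokenizer.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough token estimate using the ~3000 words : ~4000 tokens ratio from the text."""
    words = len(text.split())
    return math.ceil(words * 4 / 3)


def trim_history(messages: list[str], max_tokens: int = 4000) -> list[str]:
    """Keep the newest messages whose combined estimate fits within max_tokens."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):   # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break                        # older messages fall outside the window
        kept.append(message)
        used += cost
    kept.reverse()                       # restore chronological order
    return kept


history = ["first question " * 1000, "second question " * 750, "latest question " * 750]
print(len(trim_history(history)), "of", len(history), "messages still fit")
```

In this toy example the oldest message no longer fits, which mirrors the behavior described: anything beyond the window is simply not available to the model.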

3. Does ChatGPT log conversations?

Thanks to this extension, it is now easier to collect and share the AI's responses. With ChatGPT, Chat Logs records the entire conversation and creates a URL to share it with others. You can share the URL immediately rather than taking numerous screenshots of the AI chatbot dialogue.

4. Does ChatGPT have a word limit?

I think ChatGPT could be very useful to create the boilerplate to a script's character dialog but 600 words is just not nearly enough. One of the most maddening things about ChatGPT is that it swears it is absolutely unable to do a number of things that it will happily do if you word the request differently.
